kafka drops disks

KIP-1150 brings 20x cheaper storage and infinitely cheaper data transfer fees

As we covered last time - open source activity is absolutely buzzing.

Since then, it’s only been getting louder. April recorded the highest number of KIPs ever submitted to Kafka in a month, and among them was KIP-1150: Diskless Topics! 🏆

This is a pretty large KIP, driven by Aiven. It’s not yet accepted, but it is seeing heavy investment, is well thought-out and is the next logical step for Kafka. It’s very likely it’ll get accepted in one way or another.

That being said - it’s still WIP in the DISCUSS stage. Things mentioned here are subject to change.

What is Diskless?

In case you’ve been living under a rock, the Kafka space has been on a crazy cost-deflation and infra-simplification frenzy for the last two years.

It all came from these simple realizations:

❌ Cloud Providers make a killing on charging us for cross-zone data transfer fees and disks.

❌ Kafka’s replicated design means we pay 3x the disk fees and up to 4.6x the throughput in cross-zone fees (in replication and client to broker transfer)

✅ S3 charges you ~20x less for storage (compared to EBS/disks), plus the replication and client traffic come for free.

💡 by decoupling data from metadata and writing directly to S3, you can achieve a leaderless (active-active broker) design that:

eliminates disks entirely.

eliminates broker→broker replication.

eliminates all client→broker cross-zone transfers too.

The result? Your storage costs ~20x less, your cross-zone data transfer costs ~infinitely less (they’re zero).
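To see where a number like ~20x can come from, here is a rough back-of-envelope sketch. The prices are illustrative list prices and the 50% disk utilization is purely my assumption - plug in your own numbers.

```python
def storage_cost_ratio(disk_price_gb_month, object_price_gb_month,
                       replication_factor=3, disk_utilization=0.5):
    """How many times more replicated disks cost than object storage,
    per GB of logical data stored for a month."""
    # Every logical GB is stored once per replica, and provisioned disks
    # are only partly full, so you also pay for the unused headroom.
    disk_cost = disk_price_gb_month * replication_factor / disk_utilization
    return disk_cost / object_price_gb_month


# Assumed inputs: $0.08 per GB-month for a gp3-style volume,
# $0.023 per GB-month for S3 Standard, 3x replication, disks 50% full.
ratio = storage_cost_ratio(0.08, 0.023)
print(f"disks cost ~{ratio:.1f}x more per logical GB")
```

With fuller disks or a lower replication factor the ratio shrinks, so treat ~20x as a ballpark rather than a guarantee.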

Diskless 101

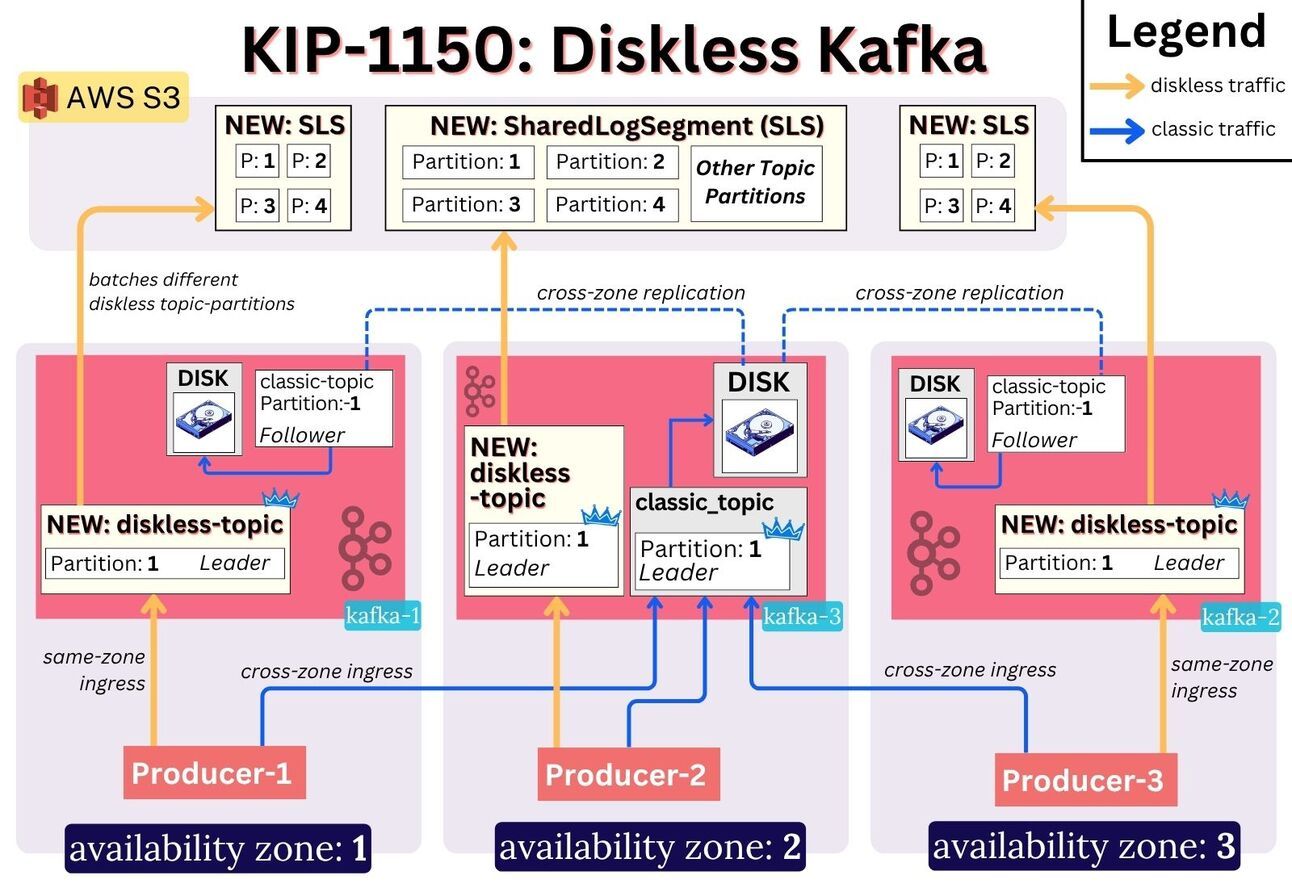

This isn’t a new cluster type like WarpStream - it’s simply a new type of topic in Apache Kafka. Diskless topics is the name.

When enabled (via diskless.enable), the topics will:

be leaderless - any broker can serve produce/fetch requests for their partitions

write directly to S3 - no replication for diskless topics between brokers

(I say S3 for clarity, but it works with any cloud object store like GCS or Azure’s)

store a SharedLogSegment file in S3 that consists of many different partitions’ data from that time period.

(unlike today where a segment has only one partition’s data)

scale up/down instantly and easily - when you start up new brokers, clients writing to diskless topics will naturally and automatically rebalance to them.

Diskless Topics architecture

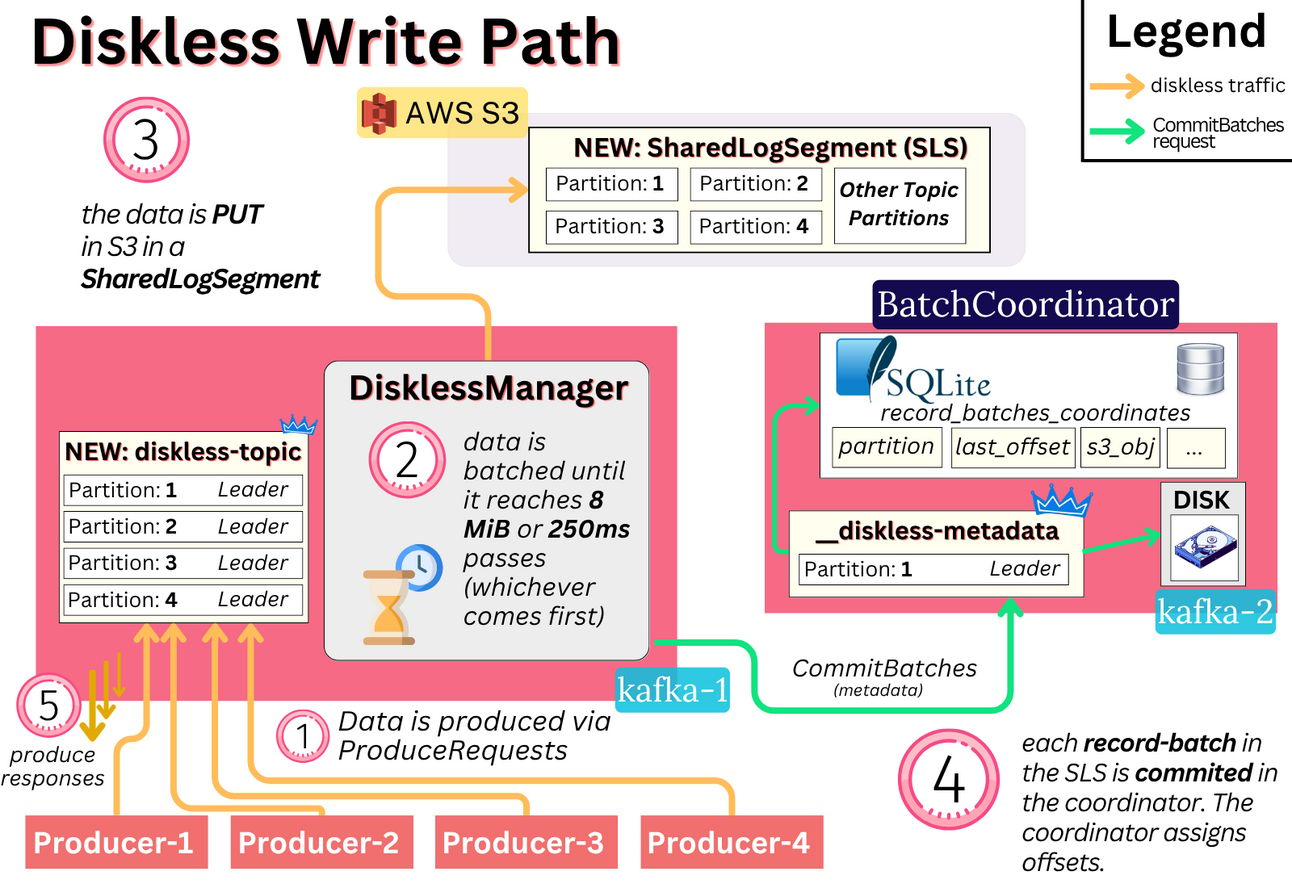

Write Path 101

producers bootstrap and receive a (modified) metadata response containing the one leader broker they should write to.

brokers accumulate data from producers for either 250ms or 8 MiB (whichever comes first; configurable), then persist it in a SharedLogSegment (SLS) to S3.

The broker first writes the SLS to S3, then sends a request to the BatchCoordinator to commit the offsets for the data.

at the time of writing to S3, the data does NOT have offsets.💡

The BatchCoordinator acts as a sequencer and persists the offsets in its backing store. It essentially stores the coordinates for an offset - “which file in S3 contains this record?”

It’s a pluggable interface and the plan is to have a default implementation backed by a topic called __diskless-metadata, using SQLite as a way to materialize it and enable efficient queries on it. Aiven currently have a working implementation using just Postgres.

The write-capable coordinator is the leader of the __diskless-metadata partition. The replicas of the partition are read-capable coordinators.
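The write path above can be sketched in a few lines. This is a toy model, not the KIP’s or Inkless’ actual code: the class names, the dict standing in for S3 and the injectable clock are all hypothetical. It only shows the ordering - buffer, upload without offsets, then commit to get offsets.

```python
import time
from collections import defaultdict

MAX_BYTES = 8 * 1024 * 1024   # 8 MiB, the default mentioned above
MAX_WAIT_S = 0.250            # 250 ms, the default mentioned above


class BatchCoordinator:
    """Toy sequencer: assigns offsets and remembers which object holds them."""

    def __init__(self):
        self.next_offset = defaultdict(int)   # partition -> next free offset
        self.coordinates = []                 # (partition, base_offset, count, object_key)

    def commit(self, object_key, batches):
        assigned = []
        for partition, records in batches:
            base = self.next_offset[partition]
            self.next_offset[partition] += len(records)
            self.coordinates.append((partition, base, len(records), object_key))
            assigned.append((partition, base))
        return assigned


class DisklessBroker:
    """Hypothetical broker; a real one would also flush on a timer."""

    def __init__(self, object_store, coordinator, clock=time.monotonic):
        self.object_store = object_store      # a dict standing in for S3
        self.coordinator = coordinator
        self.clock = clock
        self.buffer = []                      # [(partition, [records])]
        self.buffered_bytes = 0
        self.first_append_at = None
        self.uploads = 0

    def produce(self, partition, records):
        if self.first_append_at is None:
            self.first_append_at = self.clock()
        self.buffer.append((partition, records))
        self.buffered_bytes += sum(len(r) for r in records)
        return self.maybe_flush()

    def maybe_flush(self):
        if not self.buffer:
            return None
        waited = self.clock() - self.first_append_at
        if self.buffered_bytes < MAX_BYTES and waited < MAX_WAIT_S:
            return None                       # neither limit reached yet
        # 1) Upload one shared segment with many partitions' data, no offsets yet.
        key = f"sls-{self.uploads:06d}"
        self.uploads += 1
        self.object_store[key] = list(self.buffer)
        # 2) Only then commit to the coordinator, which assigns the offsets.
        assigned = self.coordinator.commit(key, self.buffer)
        self.buffer, self.buffered_bytes, self.first_append_at = [], 0, None
        return assigned


# Demo with a fake clock so the 250 ms limit can be crossed deterministically.
now = [0.0]
store = {}
broker = DisklessBroker(store, BatchCoordinator(), clock=lambda: now[0])
first = broker.produce("orders-0", [b"a", b"b"])   # still buffering
now[0] = 0.3                                        # 300 ms later
assigned = broker.produce("clicks-1", [b"c"])       # time limit hit, flush
print(first, assigned, list(store))
```

Note how one uploaded object carries data for both partitions, and how the offsets only exist once the coordinator has answered.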

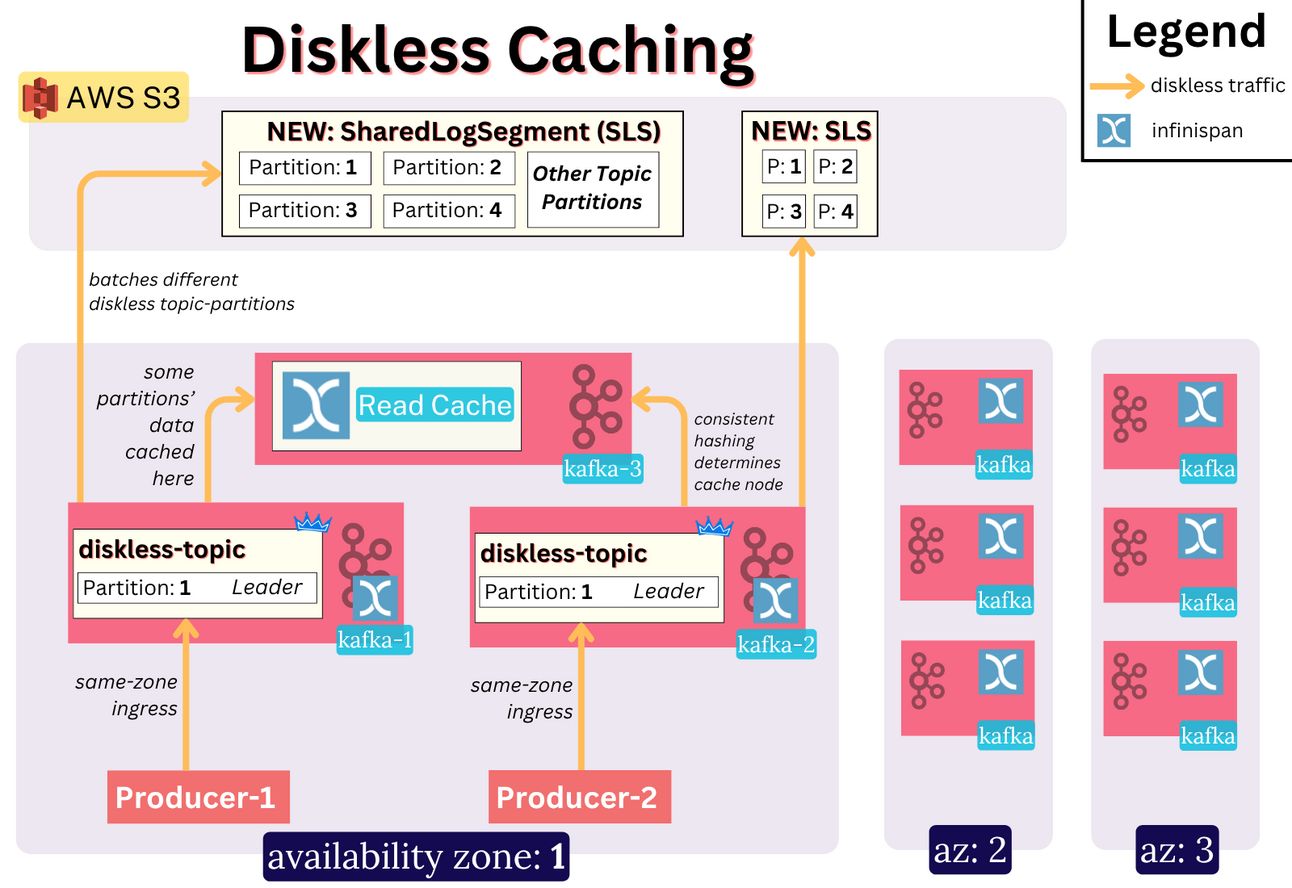

a distributed cache is used so the reads are efficient

each AZ has a copy of the cache of the segments

it uses the Infinispan distributed cache library

on writes, the cache is also populated. This means a broker writes simultaneously to the cache and to S3

the file stored in S3 is a SharedLogSegment, consisting of many different partitions’ data from that time period.

(unlike today where a segment has only one partition’s data)

periodic merging of the small SLS files into larger ones will be done (the KIP is still unclear on the details)
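As a rough illustration of the caching idea, here is a minimal write-through cache sketch. It uses plain dicts and hypothetical names, not Infinispan’s API, and how entries actually reach other AZs is my assumption (here a zone simply fills its cache on a miss).

```python
class ZoneCache:
    """A dict standing in for one AZ's view of the distributed cache."""

    def __init__(self):
        self.entries = {}
        self.hits = 0
        self.misses = 0


def write_segment(key, data, object_store, local_cache):
    # Write path: the cache is populated alongside the object store upload
    # (done one after the other here; a real broker could do both in parallel).
    object_store[key] = data
    local_cache.entries[key] = data


def read_segment(key, object_store, local_cache):
    # Read path: serve from the local AZ's cache when possible,
    # otherwise fall back to the object store and remember the result.
    if key in local_cache.entries:
        local_cache.hits += 1
        return local_cache.entries[key]
    local_cache.misses += 1
    data = object_store[key]
    local_cache.entries[key] = data
    return data


object_store = {}
zone_a, zone_b = ZoneCache(), ZoneCache()
write_segment("sls-000000", b"payload", object_store, zone_a)
read_segment("sls-000000", object_store, zone_a)   # hit: cached on write
read_segment("sls-000000", object_store, zone_b)   # miss: goes to the object store
read_segment("sls-000000", object_store, zone_b)   # hit: cached by the previous read
print(zone_a.hits, zone_a.misses, zone_b.hits, zone_b.misses)
```

The point is simply that a consumer reading fresh data in the same zone as the writer should rarely need to touch S3.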

For more on the architecture, see Aiven’s docs.

Inkless vs Diskless

Diskless is the open source KIP-1150.

Inkless is Aiven’s MVP implementation, which they also open sourced (under AGPL).

There are differences - Diskless is not yet fully designed/implemented, and Inkless is.

Inkless uses Postgres as the batch coordinator, directly utilizing Postgres functions.

Inkless uses the Distributed Infinispan Cache; Diskless doesn’t even have a KIP for this.

Aiven is not accepting patches in the Inkless repo - their goal is to delete the repo once the KIP gets merged. But they want to work in public (which is really cool)

Most interesting in that repo is their commit history. It seems like they started work on this ~8 months ago, with a team of just 4 people. That’s really impressive progress.

🤨 What’s the Catch?

Latency.

Latency suffers massively in this Diskless architecture.

The Inkless repo explains the latency breakdown well:

Writes consist of:

commit interval - up to 250ms by default (inkless.produce.commit.interval.ms)

S3 PUT latency - 200-400ms p99 latencies for 2-8MB segments

Batch Coordinator commit - 10-20ms p99 for the Postgres implementation

Reads consist of:

Finding the (S3) record-batch coordinates for the given offsets

~10ms p99

Planning the fetching strategy (what comes from cache, what from S3)

Fetching from S3 and Cache

p99 of ~100-200ms for 2-8MB objects

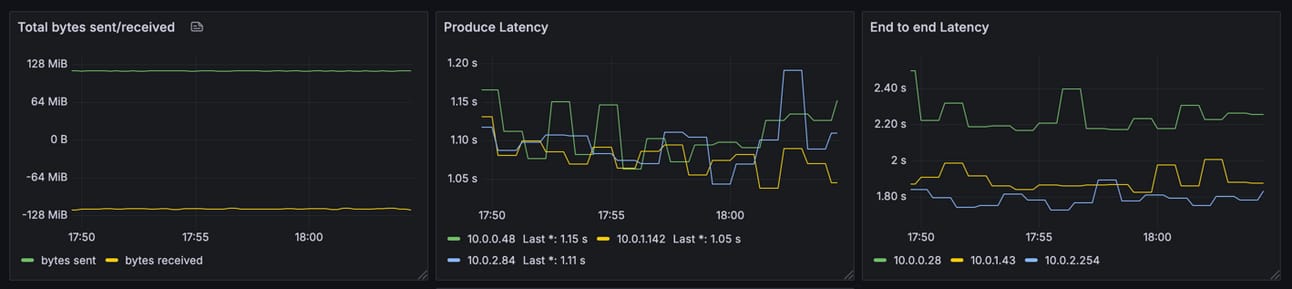

Given this data, you’d expect a p99 latency of at most ~670ms for writes and ~250ms for reads, for an end-to-end latency of no more than 1000ms.
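The arithmetic behind those numbers, using the upper end of each range quoted above. Adding p99s together is a back-of-envelope estimate rather than a true p99 of the whole path, and the planning step has no published number, so it is left out here.

```python
# Upper-end p99 figures (ms) taken from the breakdown above.
write_p99_ms = {
    "commit interval": 250,
    "S3 PUT": 400,
    "batch coordinator commit": 20,
}
read_p99_ms = {
    "find batch coordinates": 10,
    "fetch from S3 / cache": 200,
}

write_total = sum(write_p99_ms.values())
read_total = sum(read_p99_ms.values())
end_to_end = write_total + read_total

print(f"write ~{write_total}ms, read ~{read_total}ms, e2e ~{end_to_end}ms")
```

The read side lands a bit under ~250ms, which leaves some headroom for the planning step.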

Despite that, the doc in the Inkless repo shows higher e2e latency of up to 2.4 seconds - perhaps pointing towards opportunity for optimization.

the performance graphs shared in Inkless

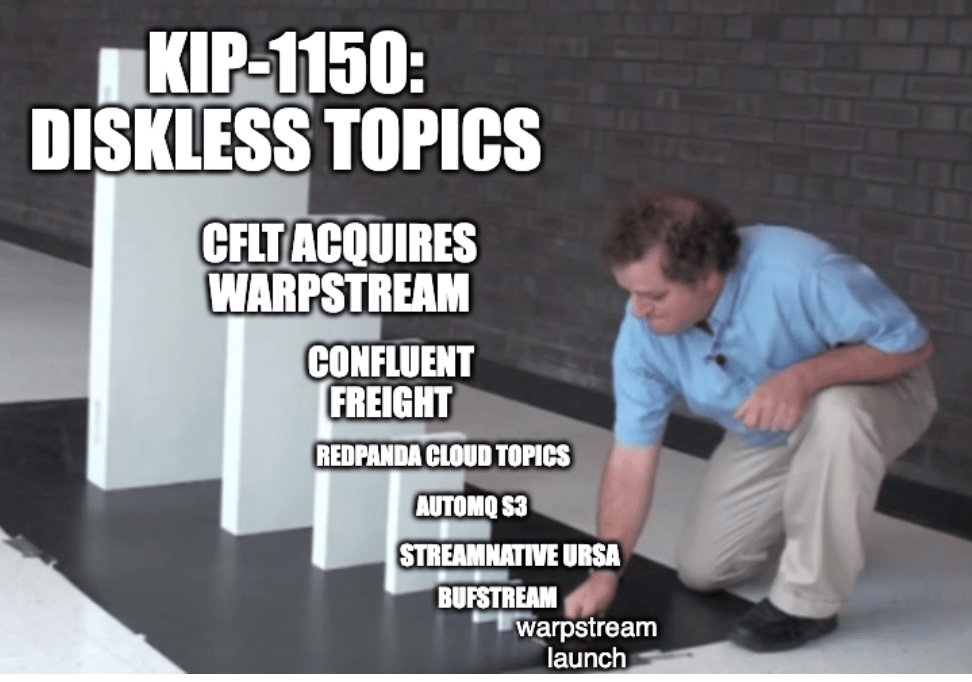

The Annals of Diskless

Diskless’ brief history shows how WarpStream forever changed the Kafka space with their launch.

August 2023: WarpStream launched

May 2024: Confluent Freight announced

May/July 2024: StreamNative Ursa, Bufstream, AutoMQ S3 launched

Sep 2024: Confluent buys WarpStream for $220M; Redpanda announced intention for Cloud Topics

April 2025: KIP-1150 was published by Aiven

May 2025: Aiven releases the code for KIP-1150 (under AGPL) and offers it in their BYOC product.

Why Does Diskless Matter?

⭐️ Open Source - because it’s open source, you are not locked in to any vendor. Also - it allows you to actually pocket the cost savings.

💸 Cost - Diskless Kafka will cost you 11.2x less in infra fees than e.g. Confluent Freight ($100k/yr vs $2M/yr)

Note that in Azure (where inter-zone networking is free) Diskless does not materially reduce the bill.

Also note that under a certain threshold, the Diskless approach is not cheaper (because of the S3 PUT overhead)

🌞 Simplicity - because it’s stateless, you eliminate a whole host of issues (IOPS, disk space, rebalancing partitions)

✨ Flexibility - Kafka will be the only system in the market to support both slow and fast topics in one cluster.

🍱 Single Takeaway

With Tiered Storage, Networking is 83%+ of a Kafka deployment’s cost. ($882k/year out of $1.05M/year)

With Diskless, the networking cost disappears.

The cost goes down to ~$200k/yr - a 5x reduction. 🏆
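A quick check of the takeaway’s arithmetic, using only the yearly figures quoted above (the variable names are mine):

```python
tiered_total = 1_050_000      # $/yr with Tiered Storage
tiered_networking = 882_000   # $/yr of that spent on networking
diskless_total = 200_000      # $/yr with Diskless (approximate)

networking_share = tiered_networking / tiered_total
reduction = tiered_total / diskless_total

print(f"networking share: {networking_share:.0%}, reduction: {reduction:.2f}x")
```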

🎬 Fin.

That’s it for this edition. Here is what else I can offer:

⭐️ Diskless Solutions Comparison (coming soon) - I will publish a long-form breakdown and comparison of every diskless solution out there. Subscribe to my long-form newsletter to not miss that - ✅ https://bigdata.2minutestreaming.com/.

⭐️ Technical Details about Diskless (2:20hr podcast) - there is no better technical deep dive into KIP-1150 out there on the internet. Make sure to watch it if you’re interested 👇

More Kafka? 🔥

Here’s what we posted on social media most recently:

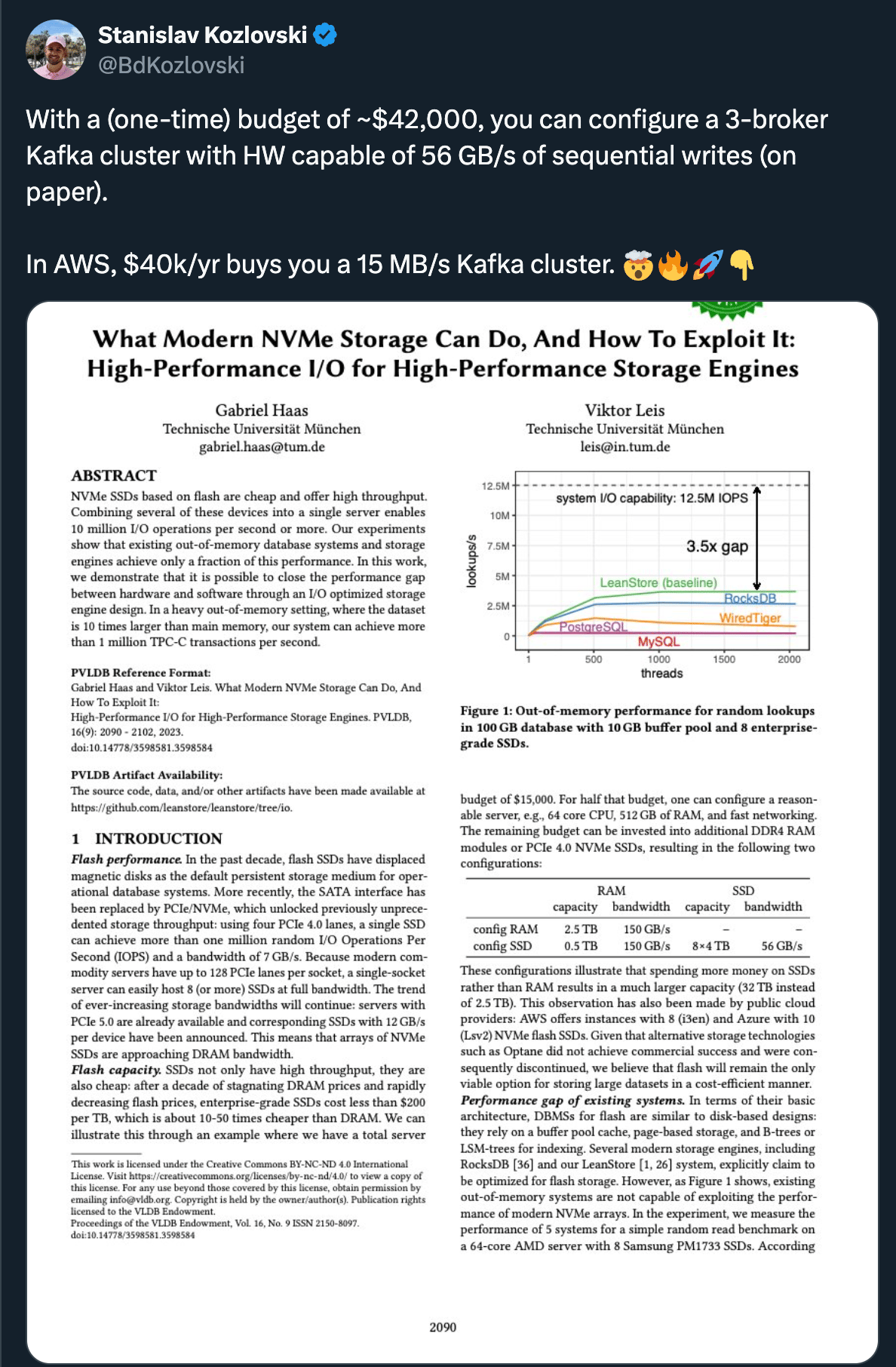

NVMe Hardware advances | LinkedIn | X (Twitter)

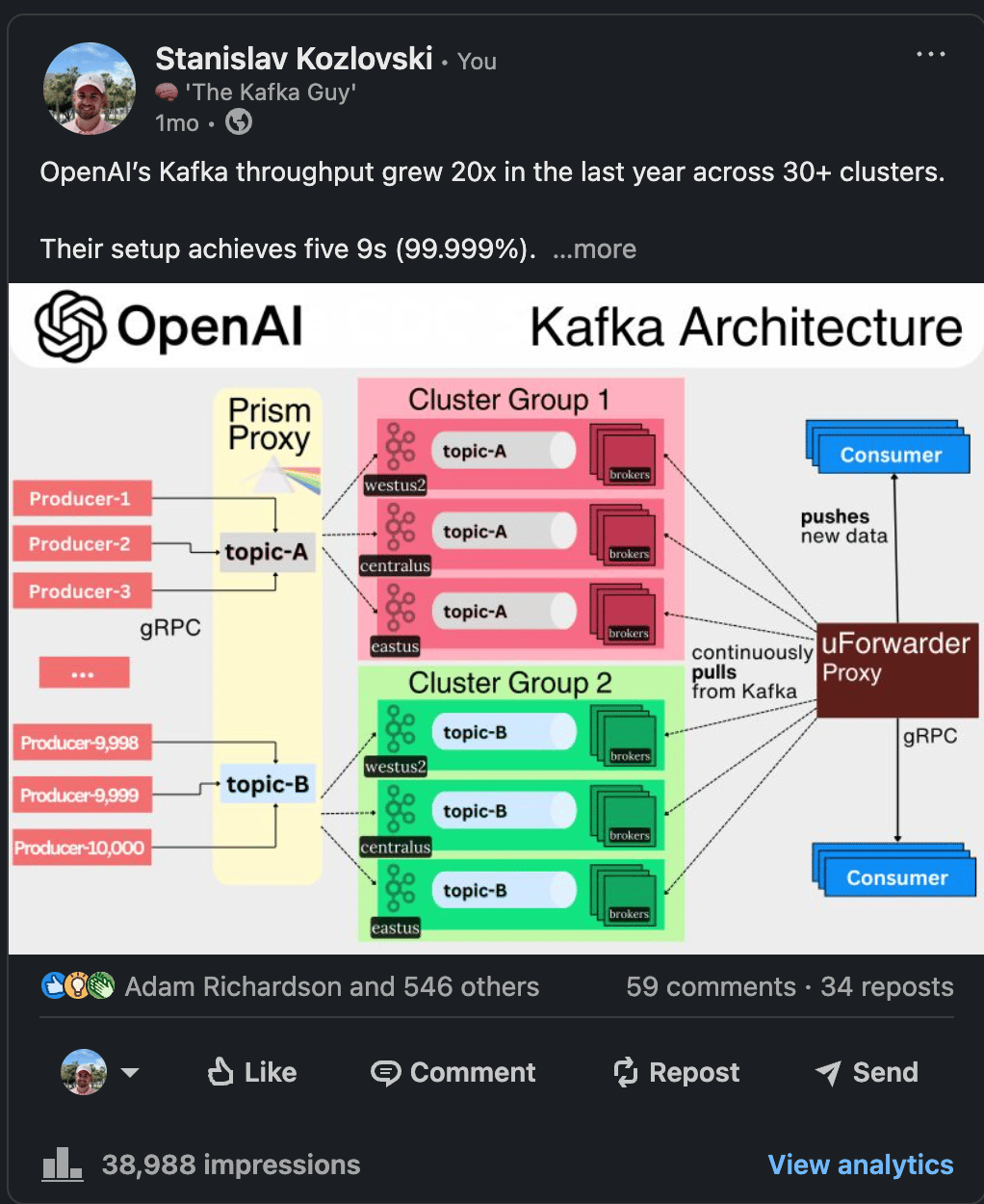

OpenAI’s Kafka Architecture

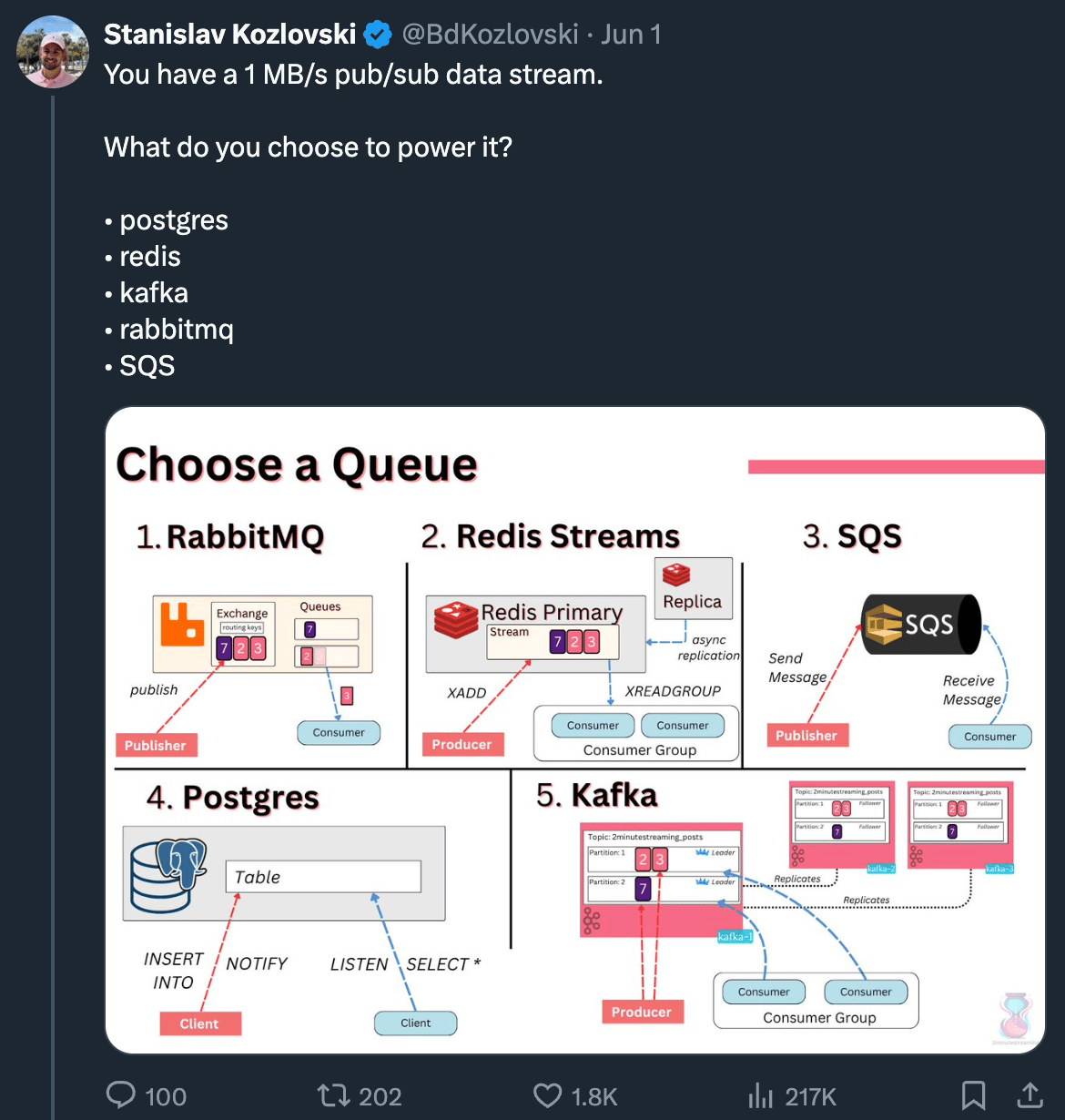

What Queue do you choose

(this created some great discussions on hackernews too)

Low Latency Use Cases

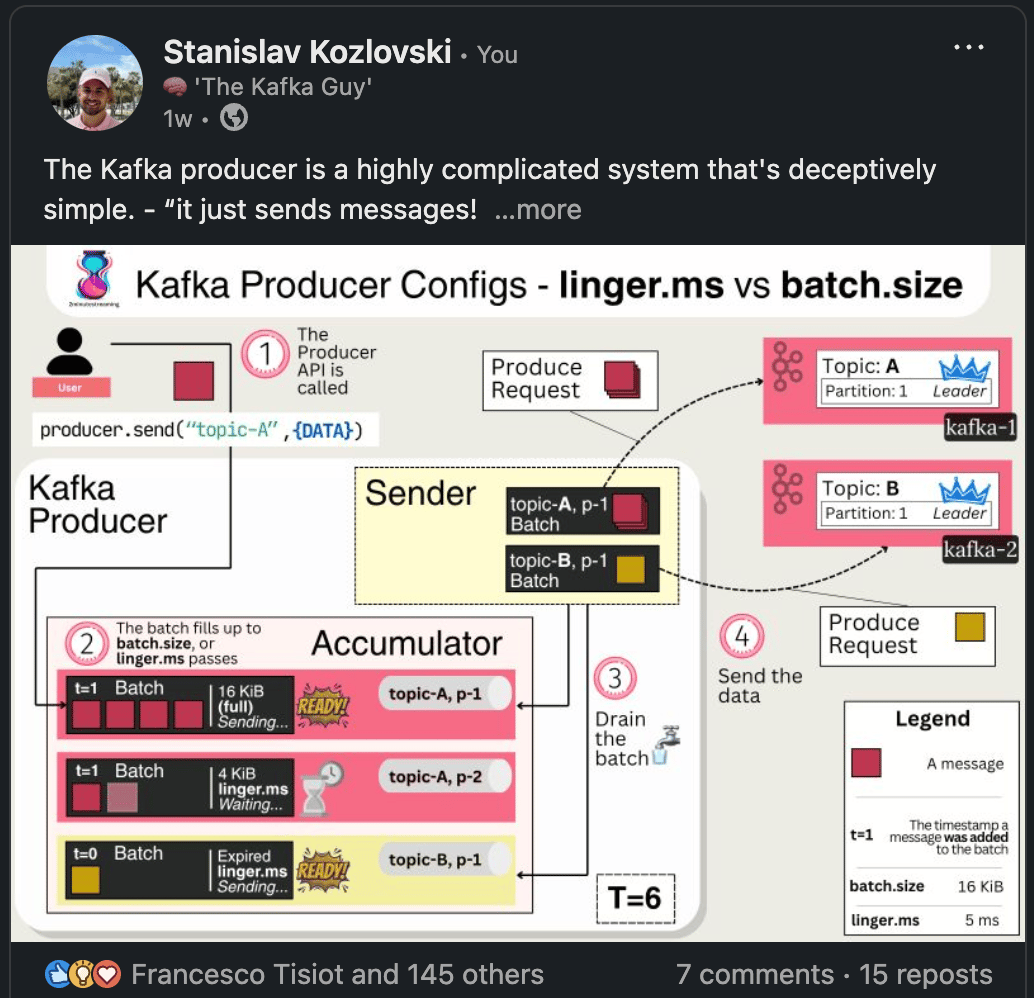

Batch Size vs Linger Ms

Kafka’s Schema Problem

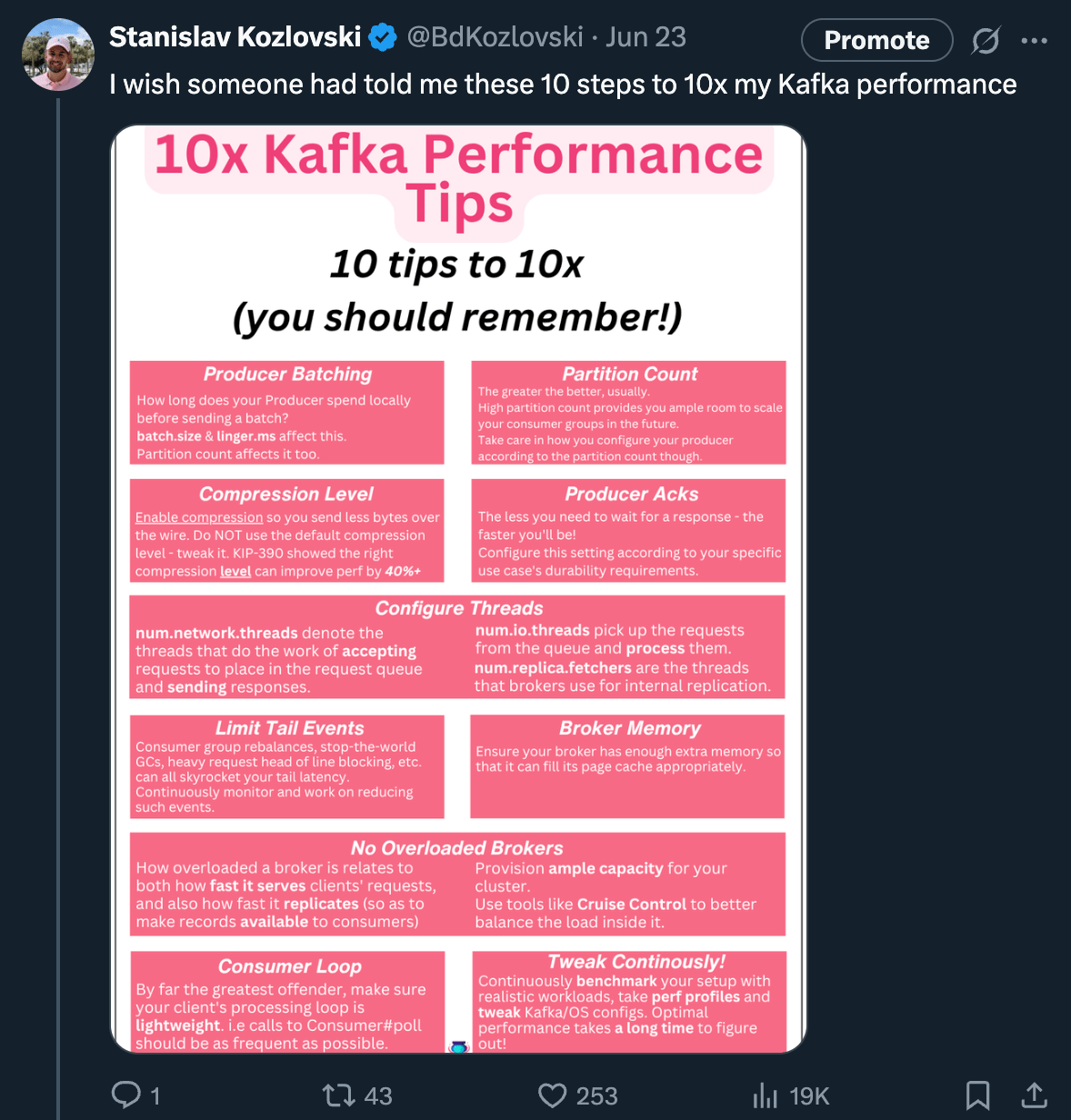

10 tips to 10x your Kafka performance | LinkedIn | X (Twitter)

4 Ways KIP-405 Serves Reads Fast 🔥

Apache®, Apache Kafka®, Kafka, and the Kafka logo are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries. No endorsement by The Apache Software Foundation is implied by the use of these marks.