2 Minute Streaming

just use postgres

Kafka vs Postgres? Here's why you don't need Kafka (all the time)

Small Data

There’s this movement going around that says:

- Big Data is a scam. You aren’t using that much data:
  - 99.9% of real-world BigQuery queries can run on a single machine.
  - Most apps will never see 1 TB of data.
- Modern hardware is really good:
  - a 2025-era AMD CPU has 192 cores 👀
  - in the last 10 years, core count went up ~10x and price per core went down ~5x, not to mention that the cores themselves got faster;
- therefore 👉 you don’t necessarily need complex, high-performance, specialized distributed software for every use case.

Just Use Postgres

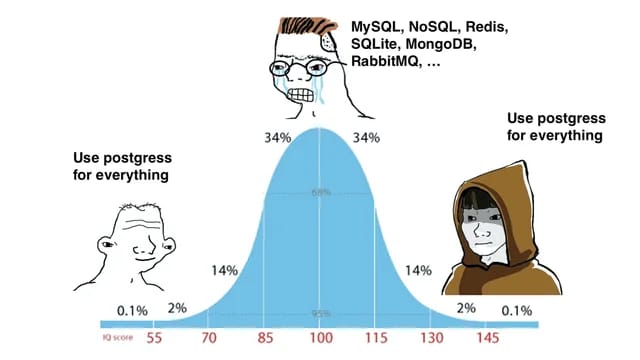

Hence, a meme was born:

The meme is intentionally oversimplified, but it’s rooted in a very well-reasoned argument that most vendor-employed people will never admit.¹

The argument, in a 2-minute summary:

If your team is already using Postgres, then before adding another system to your stack, check whether Postgres can support the use case. Chances are it can (unless you’re at Google scale).

Because Postgres is so extensible and mature, it has gained many features (natively or through extensions) that compete with auxiliary systems:

- Redis → CREATE UNLOGGED TABLE
- MongoDB → jsonb
- Elasticsearch → tsvector / tsquery
- Snowflake/OLAP → pg_lake / pg_duckdb / pg_mooncake
- AI Vector DBs → pgvector / pgai
- Queues → pgmq
- ClickHouse → timescaledb²

For the longer explanation, a whole book has been written on the matter. Until you get to it, we’ll make do with memes:

What About Kafka?

This is a Kafka blog, after all. I ran some tests and found that a minimal 3-node Postgres setup (c7i.xlarge instances) can sustain a Kafka-style workload of ~5 MiB/s of writes and ~25 MiB/s of reads:

| Direction | Bandwidth | Msg/s | p99 / p95 latency |
|---|---|---|---|
| Write | 4.9 MiB/s | 5015 | 153 ms / 7 ms |
| Read | 24.5 MiB/s | 25073 | 57 ms / 4.9 ms |

I even got it running up to 240 MiB/s in and 1.16 GiB/s out! See the full results here.
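Dividing bandwidth by message rate gives the average message size the 5/25 MiB/s numbers imply. This is a back-of-the-envelope check on the published figures, not output from the benchmark itself:

```python
MIB = 1024 * 1024  # bytes per MiB


def avg_message_bytes(mib_per_s: float, msgs_per_s: float) -> float:
    """Average message size in bytes implied by a bandwidth and a message rate."""
    return mib_per_s * MIB / msgs_per_s


write_size = avg_message_bytes(4.9, 5015)    # write row of the results table
read_size = avg_message_bytes(24.5, 25073)   # read row of the results table
fanout = 24.5 / 4.9                          # bytes read per byte written

print(f"write ≈ {write_size:.0f} B/msg, read ≈ {read_size:.0f} B/msg, fan-out ≈ {fanout:.1f}x")
```

Both directions work out to roughly 1 KiB per message, and reads run at 5x the write rate, which suggests each message was consumed about five times. That last part is an inference from the numbers; the consumer setup isn’t described here.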

Pretty good for a B-tree! Sure, Kafka could do a lot more with the same hardware. But if you’re like the 55% of respondents in this survey who use Kafka for < 1 MiB/s, do you care?³
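For a sense of how small < 1 MiB/s is, here is the arithmetic for a full day at exactly 1 MiB/s, plus a week of retention (7 days matches Kafka’s default log.retention.hours of 168). It counts a single copy of the data, before any replication, and compares against the measured 4.9 MiB/s write rate:

```python
SECONDS_PER_DAY = 24 * 60 * 60
RETENTION_DAYS = 7  # Kafka's default log.retention.hours = 168, i.e. 7 days


def daily_volume_gib(mib_per_s: float) -> float:
    """Data written per day, in GiB, at a constant ingest rate given in MiB/s."""
    return mib_per_s * SECONDS_PER_DAY / 1024


per_day = daily_volume_gib(1.0)       # 84.375 GiB/day
retained = per_day * RETENTION_DAYS   # 590.625 GiB for a week, single copy
headroom = 4.9 / 1.0                  # measured write rate vs. a 1 MiB/s workload

print(f"{per_day} GiB/day, {retained} GiB retained, {headroom:.1f}x headroom")
```

A week of data at that rate fits comfortably on one modern disk, with the minimal setup still running well below its measured write capacity.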

The point is that most people default to Kafka without asking: is this tool overkill?

The Missing Link

Kafka is immensely powerful and very useful! It has a lot of features Postgres doesn’t: the Connect ecosystem, schema evolution, tiered storage, Diskless.

But… if you don’t need those features, isn’t Postgres just as good?

Yes and no.

Yes: technically, the storage engine is good enough.

No: the UX isn’t there. You need to build your own client (SQL INSERT statements) and your own table setup. It isn’t that much work (I did this in my tests), but rolling your own contraption isn’t everyone’s cup of tea.
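Here is a minimal sketch of what “your own client and table setup” can look like: one table used as an append-only log keyed by (topic, partition, offset), plus a table of committed consumer-group offsets. All table, column, and function names are hypothetical and not necessarily the schema used in the tests above. It uses Python’s built-in sqlite3 as a stand-in so it runs without a Postgres server; on Postgres you would use BIGINT/BYTEA columns and your driver’s placeholder style, and the ON CONFLICT upsert carries over as-is. The max + 1 offset assignment is only safe with one writer per partition; concurrent producers on Postgres would need to serialize appends per partition (for example, a row lock on a per-partition counter).

```python
import sqlite3

# Stand-in for Postgres: sqlite3 ships with Python, so this runs without a server.
SCHEMA = """
CREATE TABLE IF NOT EXISTS messages (
    topic        TEXT    NOT NULL,
    partition_id INTEGER NOT NULL,
    msg_offset   INTEGER NOT NULL,
    payload      BLOB    NOT NULL,
    PRIMARY KEY (topic, partition_id, msg_offset)  -- B-tree index gives log order
);
CREATE TABLE IF NOT EXISTS consumer_offsets (
    group_id     TEXT    NOT NULL,
    topic        TEXT    NOT NULL,
    partition_id INTEGER NOT NULL,
    committed    INTEGER NOT NULL,  -- last offset the group has processed
    PRIMARY KEY (group_id, topic, partition_id)
);
"""


def produce(conn, topic, partition_id, payload):
    """Append one message and return its offset (next offset = current max + 1)."""
    with conn:  # commit the append, or roll back on error
        (last,) = conn.execute(
            "SELECT COALESCE(MAX(msg_offset), -1) FROM messages"
            " WHERE topic = ? AND partition_id = ?",
            (topic, partition_id),
        ).fetchone()
        offset = last + 1
        conn.execute(
            "INSERT INTO messages (topic, partition_id, msg_offset, payload)"
            " VALUES (?, ?, ?, ?)",
            (topic, partition_id, offset, payload),
        )
    return offset


def poll(conn, group_id, topic, partition_id, max_records=100):
    """Fetch messages after the group's committed offset, in log order."""
    row = conn.execute(
        "SELECT committed FROM consumer_offsets"
        " WHERE group_id = ? AND topic = ? AND partition_id = ?",
        (group_id, topic, partition_id),
    ).fetchone()
    committed = row[0] if row else -1  # no commit yet: start from the beginning
    return conn.execute(
        "SELECT msg_offset, payload FROM messages"
        " WHERE topic = ? AND partition_id = ? AND msg_offset > ?"
        " ORDER BY msg_offset LIMIT ?",
        (topic, partition_id, committed, max_records),
    ).fetchall()


def commit(conn, group_id, topic, partition_id, offset):
    """Record that the group has processed everything up to `offset`."""
    with conn:
        conn.execute(
            "INSERT INTO consumer_offsets (group_id, topic, partition_id, committed)"
            " VALUES (?, ?, ?, ?)"
            " ON CONFLICT (group_id, topic, partition_id)"
            " DO UPDATE SET committed = excluded.committed",
            (group_id, topic, partition_id, offset),
        )


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
for i in range(3):
    produce(conn, "orders", 0, f"order-{i}".encode())
batch = poll(conn, "billing", "orders", 0)
commit(conn, "billing", "orders", 0, batch[-1][0])  # commit the last offset read
```

Each consumer group tracks its own offset, so a second group polling the same partition still sees every message, much like Kafka consumer groups.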

I am on record saying someone should create a pg_kafka extension. Until then, we have the following projects:

- queen (brand new, looks promising)
- message-db (last commit was 3 years ago)
- Tansu: a diskless, Kafka API-compatible broker that can use Postgres as a storage backend. I recently recorded a 3h30m podcast with its creator.

More Kafka vs Postgres?

Of course, it’s a nuanced discussion. I wrote a lot more about this on my other blog.

It got very popular and stayed on the Hacker News front page for two days:

So much so that it got a response out of Confluent:

MEME: Postgres Was Made for the Log

Elephants have served as working animals for millennia across Asia and parts of Africa, most notably in logging operations where they haul massive teak logs through dense forests in Myanmar, Thailand, and India—work they performed for centuries before mechanization.

Help support our growth so that we can continue to deliver valuable content!

And if you really enjoy the newsletter in general, please forward it to an engineer. It only takes 5 seconds. Writing it takes me 5+ hours.

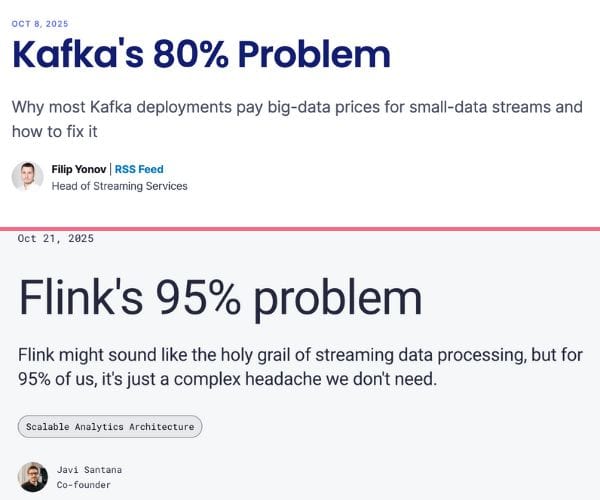

😳 Kafka’s 80% Problem and Flink’s 95% Problem

“Kafka for 250 KB/s? Flink for a meal-planning AI Agent sending 2 msg/s?

Absolutely absurd. It's high time we stop recommending these overkill big data solutions for small data problems.”

💀 IBM Acquiring Confluent for $11B

This is a major L. But it was coming…

💀 The Death of Kafka (as a business)

Seriously, these are weird times for Kafka as a technology. It’ll remain in wide use, but I think it has either passed its peak or is close to it. I have to find more time to talk about it.

(reply to this email if you have thoughts on the matter)

In any case, I’ll be here to provide commentary until the end :)

Apache®, Apache Kafka®, Kafka, and the Kafka logo are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries. No endorsement by The Apache Software Foundation is implied by the use of these marks.

Postgres is an elephant.

1 They won’t admit it because they’re literally employed to sell you something else. Remember - everyone is talking their book. In what world would it be reasonable for someone selling you something to admit that (for your use case) commodity software (Postgres) does the job just as well as their overly-specialized and highly-marketed system?

2 Cloudflare recently boasted about how, despite being a heavy ClickHouse user, just using Postgres with the timescaledb extension served them more than well: https://blog.cloudflare.com/timescaledb-art/

3 Before you say that survey isn’t representative: Aiven also shared that 50% of their users have an ingest rate below 10 MiB/s. My experience also confirms that a majority of people use Kafka at minuscule scales.